Author(s): Zixuan Wang, BASIS Independent Silicon Valley G12

Background

Scientific discovery has progressed through significant advancements, driven by the desire to understand the natural world. Early efforts, such as Newton’s study of motion and gravity, relied on careful observation and reasoning to discover fundamental principles.

As science advanced, theoretical models like the laws of thermodynamics were developed to explain complex phenomena and predict natural behaviors. This theoretical approach emphasizes universal principles expressed through mathematical formulas, models, and algorithms, and it seeks to formalize knowledge and derive conclusions through deductive reasoning. These models marked a shift from observation to deeper analysis.

As the systems under study grew more complex and interconnected, new challenges emerged. For example, ecosystems depend on interactions between animals, plants, climate, and human activity, which requires measuring and modeling countless variables. Computational methods therefore became essential. They allowed scientists to simulate complex systems, such as molecular interactions and ecosystems, where many variables interact in complicated ways.

Modern scientific discovery has become even more complicated and data-driven, with questions that involve abstract and hidden phenomena such as dark matter, astrophysics, and protein folding. The integration of artificial intelligence (AI) into big-data-driven science, a data-intensive approach that combines experiment, theory, and computer simulation, marks a transformative shift from traditional scientific progress guided by hypothesis-driven experimentation and theoretical development. It has enabled researchers to analyze massive datasets with AI, recognizing patterns and performing simulations and predictions in a number of scientific fields, including biology, earth science, physics, math, and chemistry. For instance, climate and environmental science benefit from AI’s ability to model complex systems, predict environmental changes, and assess human impacts. Similarly, tools like AlphaFold and AI-driven bioimaging have transformed protein folding research, advancing drug discovery and molecular biology.

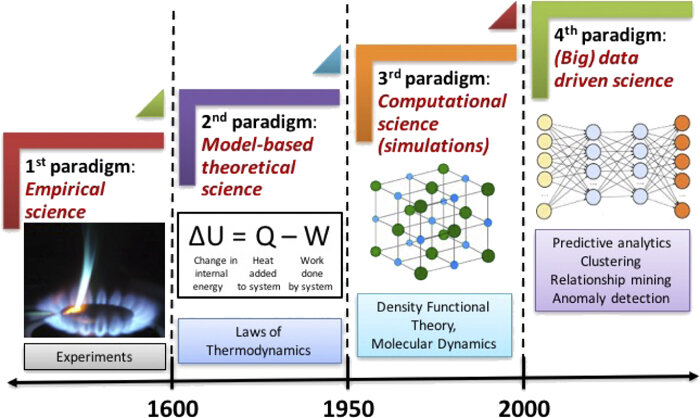

In 2009, the book The Fourth Paradigm: Data-Intensive Scientific Discovery, edited by Tony Hey and colleagues [12], categorized scientific discovery into four basic paradigms (experimental science, theoretical science, computational science, and data-intensive science; see Figure 1). Researchers at institutions all over the world are now trying to push the boundaries of the fourth paradigm by using AI to accelerate simulations of the fundamental equations of nature, which complements and amplifies all four paradigms.

Figure 1: The four paradigms of science: empirical, theoretical, computational, and data-driven.

Mission

Our mission is to advance the AI4Science field by gathering and analyzing research papers from three leading AI conferences: the Conference on Neural Information Processing Systems (NeurIPS), the International Conference on Machine Learning (ICML), and the International Conference on Learning Representations (ICLR). By tracking the growth and evolution of AI4Science, we aim to assess the AI industry’s perspective on scientific discovery and foster a collaborative community that bridges AI and science, inspiring innovation across both fields.

Preliminary Analysis

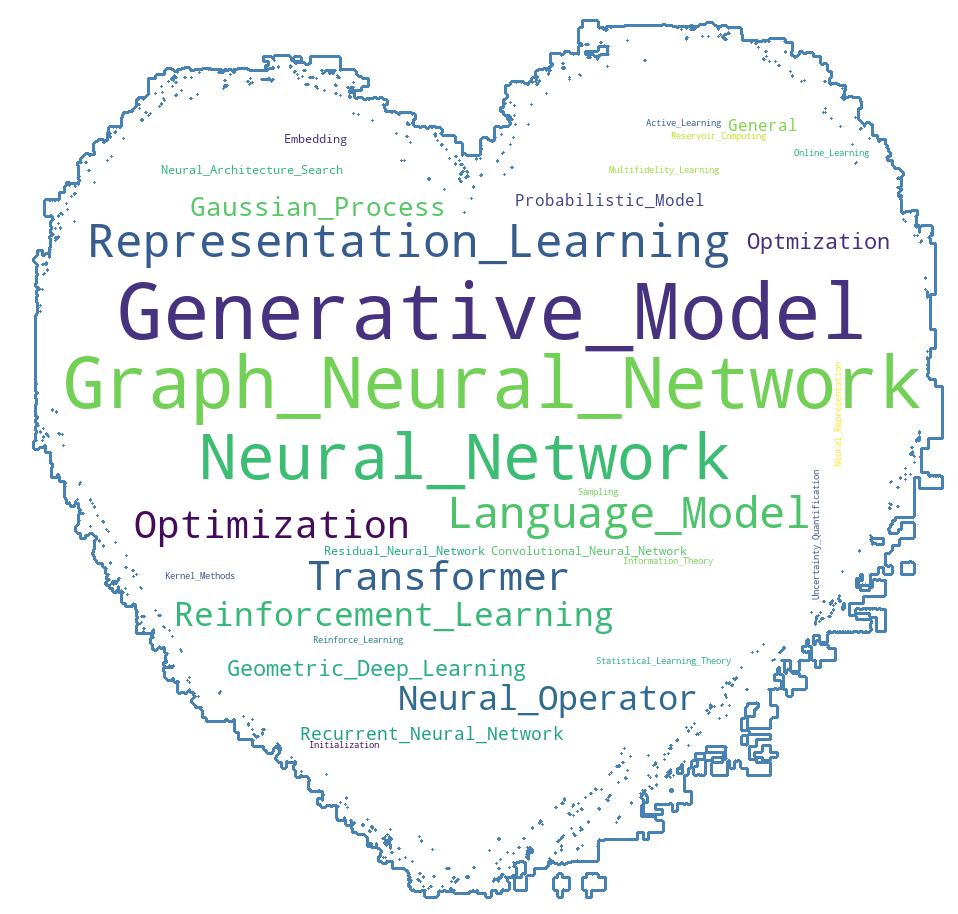

Figure 2: Word cloud showing popular machine learning technologies used in the papers.

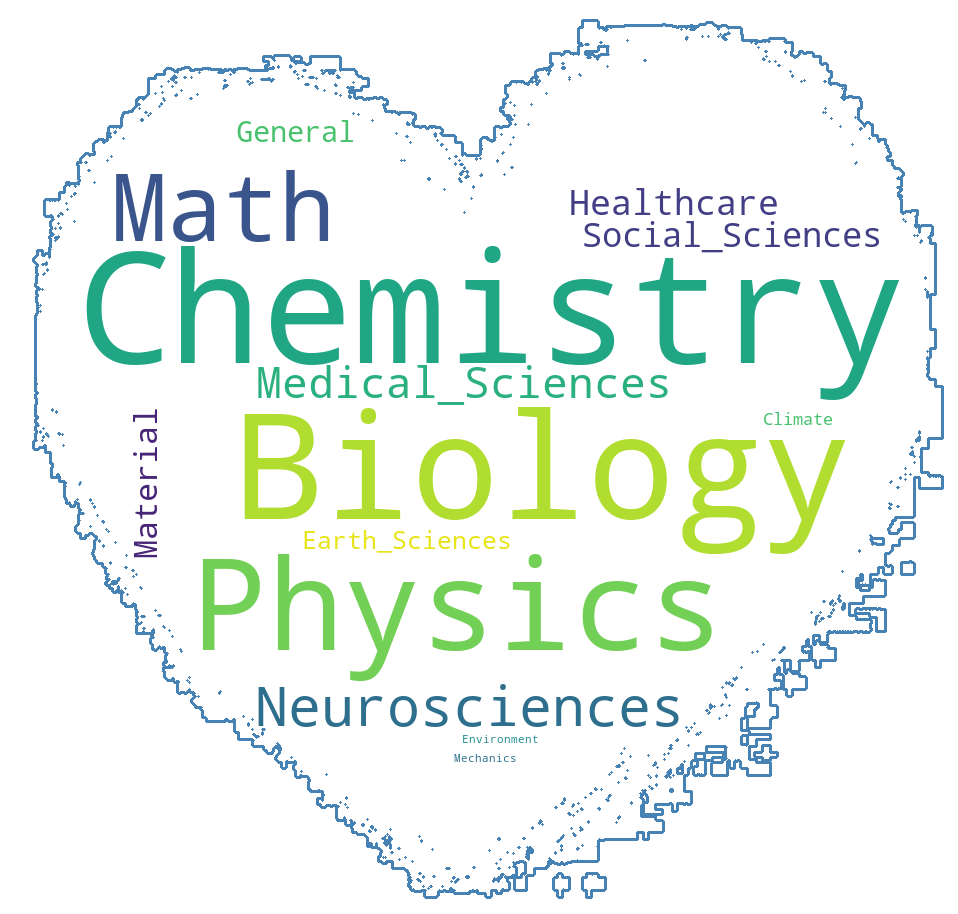

Figure 3: Word cloud showing popular fields applied in the papers.

Key AI techniques discussed in AI4Science papers include “Graph Neural Networks,” “Generative Models,” “Neural Networks,” and “Reinforcement Learning.” These methods have been applied across a diverse array of fields, such as “Chemistry,” “Biology,” “Physics,” and “Neuroscience.” The visualization underscores AI’s multidisciplinary nature, extending to areas like “Medical Sciences” and “Healthcare,” highlighting its growing role in addressing complex, data-driven challenges.
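Word clouds like those in Figures 2 and 3 are usually driven by how often each term appears. The following is a minimal sketch of that counting step, not the pipeline actually used for this analysis: the function name `count_keywords`, the keyword list, and the sample titles are all hypothetical and made up for illustration.

```python
from collections import Counter

# Hypothetical keyword list; the terms themselves come from the text above.
TECHNIQUES = ["graph neural network", "generative model", "reinforcement learning"]


def count_keywords(texts, keywords):
    """Count how many texts mention each keyword (case-insensitive substring match)."""
    counts = Counter()
    for text in texts:
        lowered = text.lower()
        for keyword in keywords:
            if keyword in lowered:
                counts[keyword] += 1
    return counts


# Made-up titles for illustration only; they are not from the analyzed dataset.
sample_titles = [
    "A Graph Neural Network for Molecular Property Prediction",
    "Reinforcement Learning for Reaction Planning",
    "Generative Models and Graph Neural Networks for Crystals",
]

counts = count_keywords(sample_titles, TECHNIQUES)
for keyword, n in counts.most_common():
    print(f"{keyword}: {n}")
```

Substring matching keeps the sketch short, but it would also match unrelated longer phrases, so a real analysis would likely need more careful tokenization or manual labeling.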

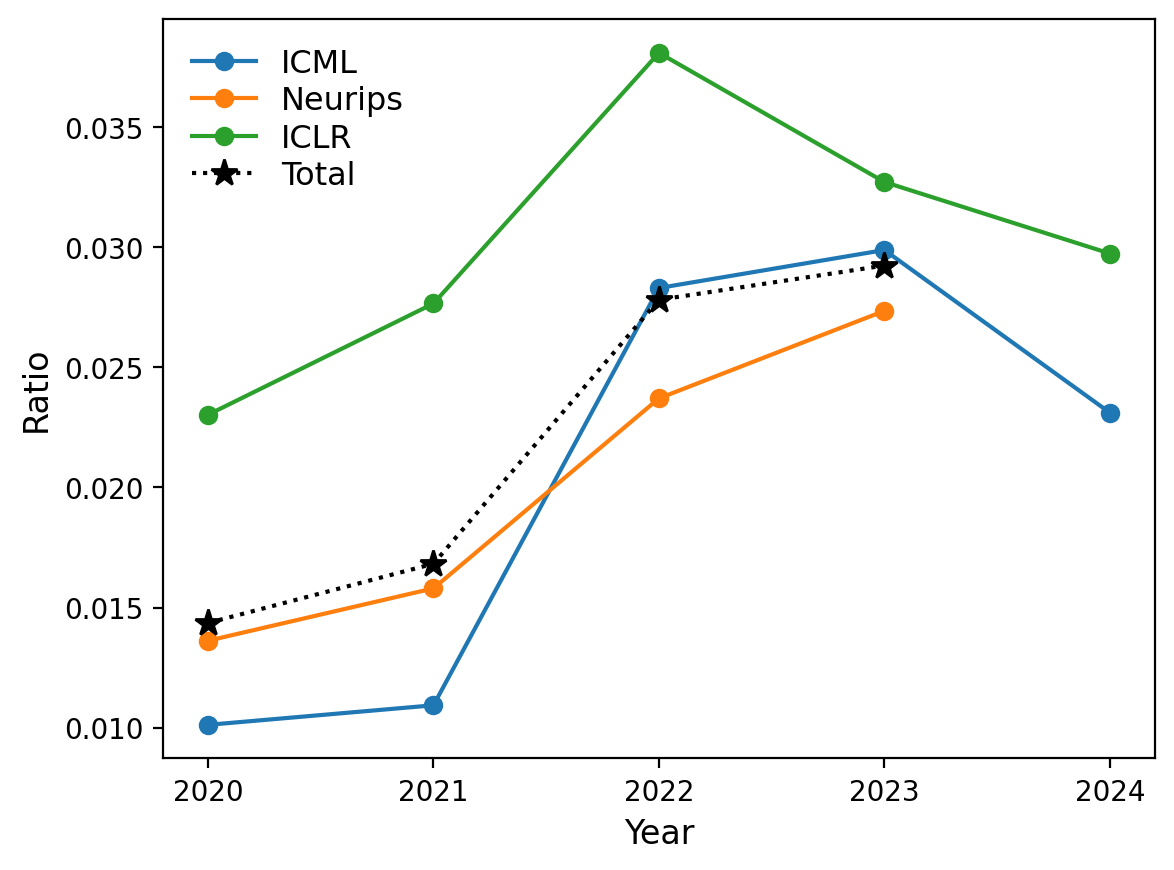

Figure 4: AI4Science paper ratios across conferences.

The percentage of AI4Science papers among all papers at the three AI conferences increases steadily each year. Innovation adoption typically follows a logistic S-curve model. As indicated by Figure 4, the increase in percentage from 2020 to 2021 is smaller than in the later intervals, marking the early adoption phase of AI4Science, followed by steady growth from 2021 to 2022 and from 2022 to 2023. We expect that the percentage of AI4Science papers will continue to grow and eventually saturate at a certain level.
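To make the logistic S-curve mentioned above concrete, here is a minimal sketch of its shape: slow early growth, faster growth near the midpoint, and saturation below a ceiling. The function name `logistic_share` and the parameter values (`CEILING`, `RATE`, `MIDPOINT`) are hypothetical, chosen only to illustrate the curve. They are not fitted to the data behind the conference-ratio figure.

```python
import math


def logistic_share(year, ceiling, rate, midpoint):
    """Logistic curve: share(t) = ceiling / (1 + exp(-rate * (t - midpoint)))."""
    return ceiling / (1.0 + math.exp(-rate * (year - midpoint)))


# Hypothetical parameters for illustration only; NOT estimated from the paper counts.
CEILING = 0.30   # assumed saturation level (30% of papers)
RATE = 1.0       # assumed growth rate per year
MIDPOINT = 2024  # assumed year of fastest growth

shares = {y: logistic_share(y, CEILING, RATE, MIDPOINT) for y in range(2020, 2031)}
# Year-over-year gains: small at first, largest near the midpoint, shrinking afterwards.
gains = {y: shares[y + 1] - shares[y] for y in range(2020, 2030)}

for year, share in shares.items():
    print(year, f"{share:.3f}")
```

Under these assumed parameters the yearly gains grow until the midpoint and shrink afterwards, which is the qualitative pattern the paragraph describes: a small first increase, then steady growth, then eventual saturation.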

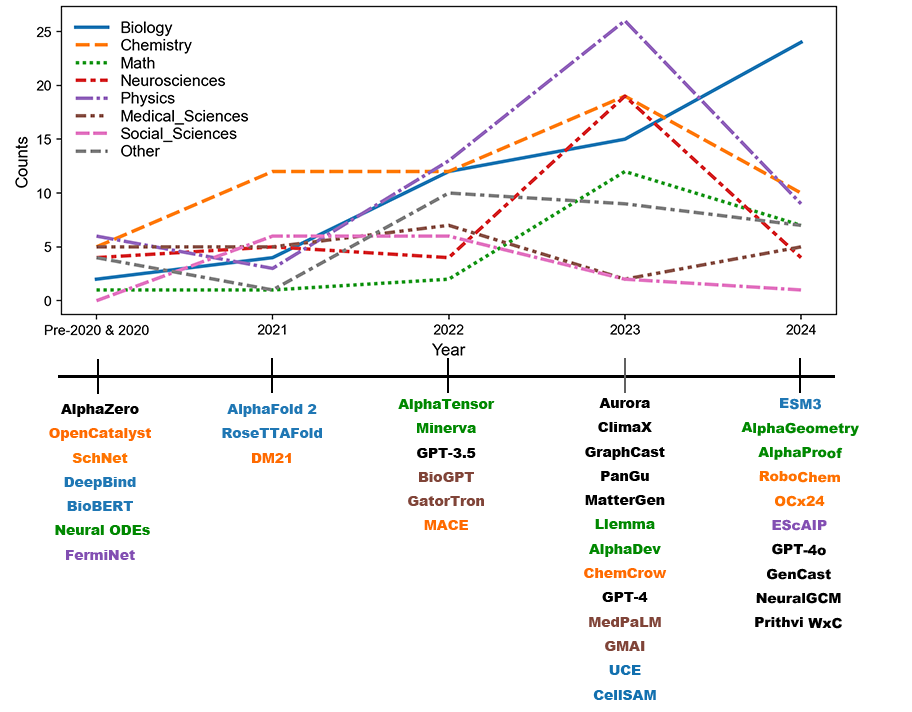

Figure 5: Trends in AI4Science papers across fields from 2020–2024.

This timeline illustrates trends in research papers related to fields like biology, chemistry, math, neurosciences, physics, medical sciences, and social sciences from 2020 to 2024. Notable AI models and studies in biology [13, 4, 2, 17, 27, 22, 11], chemistry [14, 5, 20, 8, 29, 34, 1], math [9, 10, 18, 3, 21, 35], medical sciences [19, 36, 33, 22], social sciences, materials [37], physics [24, 26], climate [6, 7, 16, 23, 25, 15, 28], and other areas [32] are marked along the timeline, showing how these innovations align with field-specific growth.

Fields Description & Analysis

- Biology: Major breakthroughs such as AlphaFold 2 and RoseTTAFold in 2021 transformed protein structure prediction, enabling significant advancements in drug design and molecular biology. Foundation models like Universal Cell Embeddings (UCE) [27] released in 2023 further expanded biological research by analyzing gene expression and cellular functions. By 2024, Biology leads in AI4Science research with over 25 published papers.

- Chemistry: Chemistry saw a notable rise in research paper output from 2020 to 2023, peaking around 2022. Key models like DM21 (DeepMind 21) [14] in 2021 addressed limitations in Density Functional Theory (DFT), accelerating progress in material science, reaction prediction, and drug discovery. In the field of retrosynthesis, Marwin Segler’s work [31] in 2018 marked a major breakthrough by combining deep learning with symbolic reasoning. This approach has enabled accurate predictions of chemical transformations and efficient synthesis pathway design. Autonomous systems like RoboChem [34] in 2024 showcased AI’s ability to automate chemical synthesis, significantly reducing the time needed for experimentation.

- Math: Research output in math remains stable and relatively low, despite some growth in 2023. Reinforcement learning (RL) has greatly impacted mathematics, enabling advancements in theorem proving, symbolic computation, and dynamical systems. Systems like AlphaProof (2024) and AlphaGeometry [35] (2024) applied AI to complex mathematical challenges, such as theorem proving and geometric problem-solving. AlphaProof and AlphaGeometry 2 together achieved a silver-medal standard at the 2024 International Mathematical Olympiad (IMO).

- Physics: Physics research has remained popular throughout the years, often involving applications such as fluid dynamics and quantum mechanics. AI tools have played a crucial role in solving partial differential equations (PDEs), which mathematically describe the behavior of continuous systems like fluids, elastic solids, temperature distributions, electromagnetic fields, and quantum mechanical probabilities. Beyond AI for PDEs, other significant areas include astrophysics (e.g., the imaging of the M87 black hole by the Event Horizon Telescope) and high-energy physics, where AI addresses the challenges posed by the massive data generated at particle accelerator facilities.

- Medical Sciences: This field is closely related to biology and chemistry, yet it shows lower adoption than either. Key innovations such as AI-driven bioimaging and medical language models like BioGPT [19] and GatorTron [36] have improved efficiency in disease diagnosis, treatment planning, and patient triage by analyzing medical records, symptoms, and test results. Industry leaders like NVIDIA and IBM are also driving AI advances in healthcare.

- Social Sciences: Interest peaked in 2020 and 2021 but declined thereafter, with attention shifting to more data-intensive disciplines. Popular applications include data analysis and pattern recognition, where AI algorithms process large datasets to uncover trends and correlations that inform social theories.

- Neurosciences: This is also a popular field that has long been closely integrated with AI. Applications typically focus on brain activity, behavior, and brain data. One popular application is using AI to reconstruct visual experiences from recorded human brain activity. By interpreting brain activity data as “texts” in latent space, generative models like latent diffusion models can produce realistic images conditioned on brain activity without requiring additional complex neural networks to be trained.

- Earth Sciences: Although it appears less popular in our data, this field tends to be a data-based application area rather than a theory-based study. In climate science, major institutions and companies such as Google DeepMind, Microsoft, and Huawei invest in developing AI-driven industry solutions (specifically AI for PDEs), such as Aurora [7], ClimaX [23], GraphCast [16], and Pangu-Weather [6]. From general weather forecasting to specific areas such as hydrology and sub-seasonal climate prediction, the field has seen great advances.

- Other: There are still many interesting developments in other areas, such as engineering and materials, but they are somewhat less visible in the AI4Science industry.

Conclusion

Our analysis highlights the strengths of AI in accelerating scientific discovery by integrating vast datasets and improving experimental design. AI has reduced barriers in traditionally complex areas such as protein folding and chemical experiments, enabling breakthroughs at unprecedented speeds. Despite these advancements, the field also faces bottlenecks, including reliance on high-quality data, uneven field adoption, and potential over-focus on popular areas like biology.

In the future, to foster a more balanced development across different fields, the AI4Science community has to actively spotlight underrepresented fields and explore their unique problems that can fit into AI-driven research. This approach will incentivize those fields and ensure that AI4Science evolves in a sustainable and inclusive manner, avoiding over-emphasis on a few popular fields. By providing equitable opportunities, the AI4Science community can nurture a healthier and more innovative trend in the future.

References

[1] Jehad Abed, Jiheon Kim, Muhammed Shuaibi, Brook Wander, Boris Duijf, Suhas Mahesh, Hyeonseok Lee, Vahe Gharakhanyan, Sjoerd Hoogland, Erdem Irtem, et al. Open catalyst experiments 2024 (ocx24): Bridging experiments and computational models. arXiv preprint arXiv:2411.11783, 2024.

[2] Babak Alipanahi, Andrew Delong, Matthew T Weirauch, and Brendan J Frey. Predicting the sequence specificities of dna- and rna-binding proteins by deep learning. Nature Biotechnology, 33(8):831–838, 2015.

[3] Zhangir Azerbayev, Hailey Schoelkopf, Keiran Paster, Marco Dos Santos, Stephen McAleer, Albert Q Jiang, Jia Deng, Stella Biderman, and Sean Welleck. Llemma: An open language model for mathematics. arXiv preprint arXiv:2310.10631, 2023.

[4] Minkyung Baek, Frank DiMaio, Ivan Anishchenko, Justas Dauparas, Sergey Ovchinnikov, Gyu Rie Lee, Jue Wang, Qian Cong, Lisa N Kinch, R Dustin Schaeffer, et al. Accurate prediction of protein structures and interactions using a three-track neural network. Science, 373(6557):871–876, 2021.

[5] Ilyes Batatia, David P Kovacs, Gregor Simm, Christoph Ortner, and Gábor Csányi. Mace: Higher order equivariant message passing neural networks for fast and accurate force fields. Advances in Neural Information Processing Systems, 35:11423–11436, 2022.

[6] Kaifeng Bi, Lingxi Xie, Hengheng Zhang, Xin Chen, Xiaotao Gu, and Qi Tian. Accurate medium-range global weather forecasting with 3d neural networks. Nature, 619(7970):533–538, 2023.

[7] Cristian Bodnar, Wessel P Bruinsma, Ana Lucic, Megan Stanley, Johannes Brandstetter, Patrick Garvan, Maik Riechert, Jonathan Weyn, Haiyu Dong, Anna Vaughan, et al. Aurora: A foundation model of the atmosphere. arXiv preprint arXiv:2405.13063, 2024.

[8] L Chanussot, A Das, S Goyal, T Lavril, M Shuaibi, M Riviere, K Tran, J Heras-Domingo, C Ho, W Hu, et al. The open catalyst 2020 (oc20) dataset and community challenges. arXiv preprint arXiv:2010.09990, 2020.

[9] Ricky TQ Chen, Yulia Rubanova, Jesse Bettencourt, and David K Duvenaud. Neural ordinary differential equations. Advances in Neural Information Processing Systems, 31, 2018.

[10] Alhussein Fawzi, Matej Balog, Aja Huang, Thomas Hubert, Bernardino Romera-Paredes, Mohammadamin Barekatain, Alexander Novikov, Francisco J R Ruiz, Julian Schrittwieser, Grzegorz Swirszcz, et al. Discovering faster matrix multiplication algorithms with reinforcement learning. Nature, 610(7930):47–53, 2022.

[11] Tomas Hayes, Roshan Rao, Halil Akin, Nicholas J Sofroniew, Deniz Oktay, Zeming Lin, Robert Verkuil, Vincent Q Tran, Jonathan Deaton, Marius Wiggert, et al. Simulating 500 million years of evolution with a language model. bioRxiv, pages 2024–07, 2024.

[12] Tony Hey, Stewart Tansley, Kristin Michele Tolle, et al. The Fourth Paradigm: Data-Intensive Scientific Discovery. Microsoft Research, Redmond, WA, 2009.

[13] John Jumper, Richard Evans, Alexander Pritzel, Tim Green, Michael Figurnov, Olaf Ronneberger, Kathryn Tunyasuvunakool, Russ Bates, Augustin Žídek, Anna Potapenko, et al. Highly accurate protein structure prediction with alphafold. Nature, 596(7873):583–589, 2021.

[14] James Kirkpatrick, Brendan McMorrow, David HP Turban, Alexander L Gaunt, James S Spencer, Alexander GDG Matthews, Annette Obika, Louis Thiry, Meire Fortunato, David Pfau, et al. Pushing the frontiers of density functionals by solving the fractional electron problem. Science, 374(6573):1385–1389, 2021.

[15] Dmitrii Kochkov, Janni Yuval, Ian Langmore, Peter Norgaard, Jamie Smith, Griffin Mooers, Milan Klöwer, James Lottes, Stephan Rasp, Peter Düben, et al. Neural general circulation models for weather and climate. Nature, 632(8027):1060–1066, 2024.

[16] Remi Lam, Alvaro Sanchez-Gonzalez, Matthew Willson, Peter Wirnsberger, Meire Fortunato, Ferran Alet, Suman Ravuri, Timo Ewalds, Zach Eaton-Rosen, Weihua Hu, et al. Graphcast: Learning skillful medium-range global weather forecasting. arXiv preprint arXiv:2212.12794, 2022.

[17] Jinhyuk Lee, Wonjin Yoon, Sungdong Kim, Donghyeon Kim, Sunkyu Kim, Chan Ho So, and Jaewoo Kang. Biobert: a pre-trained biomedical language representation model for biomedical text mining. Bioinformatics, 36(4):1234–1240, 2020.

[18] Aitor Lewkowycz, Anders Andreassen, David Dohan, Ethan Dyer, Henryk Michalewski, Vinay Ramasesh, Ambrose Slone, Cem Anil, Imanol Schlag, Theo Gutman-Solo, et al. Solving quantitative reasoning problems with language models. Advances in Neural Information Processing Systems, 35:3843–3857, 2022.

[19] Renqian Luo, Liai Sun, Yingce Xia, Tao Qin, Sheng Zhang, Hoifung Poon, and Tie-Yan Liu. Biogpt: generative pre-trained transformer for biomedical text generation and mining. Briefings in Bioinformatics, 23(6):bbac409, 2022.

[20] Andres M. Bran, Sam Cox, Oliver Schilter, Carlo Baldassari, Andrew D White, and Philippe Schwaller. Augmenting large language models with chemistry tools. Nature Machine Intelligence, pages 1–11, 2024.

[21] Daniel J Mankowitz, Andrea Michi, Anton Zhernov, Marco Gelmi, Marco Selvi, Cosmin Paduraru, Edouard Leurent, Shariq Iqbal, Jean-Baptiste Lespiau, Alex Ahern, et al. Faster sorting algorithms discovered using deep reinforcement learning. Nature, 618(7964):257–263, 2023.

[22] Michael Moor, Oishi Banerjee, Zahra Shakeri Hossein Abad, Harlan M Krumholz, Jure Leskovec, Eric J Topol, and Pranav Rajpurkar. Foundation models for generalist medical artificial intelligence. Nature, 616(7956):259–265, 2023.

[23] Tung Nguyen, Johannes Brandstetter, Ashish Kapoor, Jayesh K Gupta, and Aditya Grover. Climax: A foundation model for weather and climate. arXiv preprint arXiv:2301.10343, 2023.

[24] David Pfau, James S Spencer, Alexander GDG Matthews, and W Matthew C Foulkes. Ab initio solution of the many-electron Schrödinger equation with deep neural networks. Physical Review Research, 2(3):033429, 2020.

[25] Ilan Price, Alvaro Sanchez-Gonzalez, Ferran Alet, Tom R Andersson, Andrew El-Kadi, Dominic Masters, Timo Ewalds, Jacklynn Stott, Shakir Mohamed, Peter Battaglia, et al. Gencast: Diffusion-based ensemble forecasting for medium-range weather. arXiv preprint arXiv:2312.15796, 2023.

[26] Eric Qu and Aditi S Krishnapriyan. The importance of being scalable: Improving the speed and accuracy of neural network interatomic potentials across chemical domains. arXiv preprint arXiv:2410.24169, 2024.

[27] Yanay Rosen, Yusuf Roohani, Ayush Agarwal, Leon Samotorčan, Tabula Sapiens Consortium, Stephen R Quake, and Jure Leskovec. Universal cell embeddings: A foundation model for cell biology. bioRxiv, pages 2023–11, 2023.

[28] Johannes Schmude, Sujit Roy, Will Trojak, Johannes Jakubik, Daniel Salles Civitarese, Shraddha Singh, Julian Kuehnert, Kumar Ankur, Aman Gupta, Christopher E Phillips, et al. Prithvi WXC: Foundation model for weather and climate. arXiv preprint arXiv:2409.13598, 2024.

[29] Kristof Schütt, Pieter-Jan Kindermans, Huziel Enoc Sauceda Felix, Stefan Chmiela, Alexandre Tkatchenko, and Klaus-Robert Müller. Schnet: A continuous-filter convolutional neural network for modeling quantum interactions. Advances in Neural Information Processing Systems, 30, 2017.

[30] Scientific Figure on ResearchGate. Perspective: Materials informatics and big data: Realization of the “fourth paradigm” of science in materials science, 2025. Accessed: 2025-01-03.

[31] Marwin HS Segler, Mike Preuss, and Mark P Waller. Planning chemical syntheses with deep neural networks and symbolic AI. Nature, 555(7698):604–610, 2018.

[32] David Silver, Thomas Hubert, Julian Schrittwieser, Ioannis Antonoglou, Matthew Lai, Arthur Guez, Marc Lanctot, Laurent Sifre, Dharshan Kumaran, Thore Graepel, et al. Mastering chess and shogi by self-play with a general reinforcement learning algorithm. arXiv preprint arXiv:1712.01815, 2017.

[33] Karan Singhal, Shekoofeh Azizi, Tao Tu, S Sara Mahdavi, Jason Wei, Hyung Won Chung, Nathan Scales, Ajay Tanwani, Heather Cole-Lewis, Stephen Pfohl, et al. Large language models encode clinical knowledge. Nature, 620(7972):172–180, 2023.

[34] Aidan Slattery, Zhenghui Wen, Pauline Tenblad, Jesús Sanjosé-Orduna, Diego Pintossi, Tim den Hartog, and Timothy Noël. Automated self-optimization, intensification, and scale-up of photocatalysis in flow. Science, 383(6681):eadj1817, 2024.

[35] Trieu H Trinh, Yuhuai Wu, Quoc V Le, He He, and Thang Luong. Solving olympiad geometry without human demonstrations. Nature, 625(7995):476–482, 2024.

[36] Xi Yang, Aokun Chen, Nima PourNejatian, Hoo Chang Shin, Kaleb E Smith, Christopher Parisien, Colin Compas, Cheryl Martin, Mona G Flores, Ying Zhang, et al. Gatortron: A large clinical language model to unlock patient information from unstructured electronic health records. arXiv preprint arXiv:2203.03540, 2022.

[37] Claudio Zeni, Robert Pinsler, Daniel Zügner, Andrew Fowler, Matthew Horton, Xiang Fu, Sasha Shysheya, Jonathan Crabbé, Lixin Sun, Jake Smith, et al. Mattergen: a generative model for inorganic materials design. arXiv preprint arXiv:2312.03687, 2023.